How Automated Remediation Impacts Security Teams

If you talk to anyone in the defensive information security profession who has been doing this for more than a few years, you will find that we have a lot in common, and one of the biggest things is that we’re all exhausted. Defensive security teams are tasked with a mind-boggling variety of responsibilities. We’re told that we have to prevent, at all costs, the breaches and incidents that would put the company in the news, but we’re also often told, “Oh, and don’t get in anyone’s way or do anything that might interfere with sales/marketing/product development/the CEO’s pet project.” We’re overwhelmed and under-resourced, and we are constantly looking for new tools that can help us make better progress on our goals within the limitations we’ve been given.

Unfortunately, our options are often pretty limited. The push toward AI and ML as the saviors of the tech industry has left us with noisy tools that are difficult to interpret and produce results we can’t trust. The focus on near-zero false negatives (no missed alerts) makes these and other tools so noisy and so full of false positives that we can’t take any action without extensive investigation and research by the security team, which increases the very workload we were hoping to reduce.

Here at OpsHelm, we’re hoping to provide a beacon in this storm of unhelpful tools: a solution that focuses on finding the big, undeniable problems that you definitely don’t want to see and fixing them automatically for you. This gives you the peace of mind that those issues are taken care of in a way that you understand and can explain to your management, and allows you to perform any follow-up root cause analysis at your own pace without worrying that any delay is increasing your risk.

The deceptive allure of AI

Having spent many years on the security side of various companies, I can tell you from first-hand experience how difficult it is to trust security solutions. As a member of the security team, you carry much of the responsibility for keeping your systems functioning. How are you supposed to know which security solution to trust when vendors are never all that interested in explaining how their product actually works? It’s always: “Trust us, trust the black box, it does [insert AI magic here].” AI and anomaly detection are great buzzwords for justifying a higher price tag and letting a vendor’s sales team claim that, of course, the tool can detect X, Y, or Z, but letting them form the core of your security tooling is a recipe for disaster.

For example, I had the pleasure of working with a network security tool that used full packet capture devices to collect packets from a number of choke points, analyzed them for security events, and sent alerts to the security operations team. The tool provided a pretty graphical representation of the traffic it was analyzing (another single pane of glass to check) along with its alerts. However, because it was apparently using a proprietary, heuristic, machine learning, super secret anomaly detection engine (ahem), the reason for each alert, and sometimes even its source, was opaque to the security team. Alerts would arrive declaring that “something bad” had occurred “somewhere”, along with the offending packet.

Of course, this is not actionable information without further context. Is this alert valid? How can we tell if it’s valid without knowing how it was derived? If this determination cannot be made, do we blindly trust the alert and risk wasting time and resources on tracking it down, or do we discard it and risk failing to act on a valid security event? Can we even do anything?

So, if security doesn’t have time to investigate every one of these alerts, why not just pass them on to the responsible teams to review and either justify or act upon? Here’s why: security teams tend to have a finite amount of social capital with other internal teams, and they draw it down each time they involve others in something that turns out to be a false positive. It is rare that a security team can repeatedly ask other teams to investigate false positives without earning a reputation for crying wolf. In my experience, security teams need to be careful about spending their social capital, which means raising the alarm only once they have high-quality, actionable information to share.

Returning to the vendor in question, their overall approach to alert opacity could be summarized as “trust us, we know best.” It’s entirely possible that they did, in fact, know best as to whether a given alert was valid. However, this approach forces the team tasked with triaging such alerts to spend time determining the cause of the issue, the location of the issue, and sometimes whether there even is an issue. When a security alert arrives, the team’s ability to quickly and easily triage it for false positives and assess its potential impact makes a massive difference in how effectively it can respond.

While this decision by the vendor’s legal and marketing teams makes some business sense, it has unpleasant side effects for their customers. The desire we saw from this vendor to protect its intellectual property (or sometimes to obscure a product that doesn’t completely work) often leads, inadvertently, to a product that customers feel they cannot trust. Not to mention that “we trust the third party’s closed system” is not an answer that satisfies regulatory compliance requirements or customers.

Going beyond alerts

What’s more, even if you do trust these companies to solve your problems in a black box, they are often just finding problems; the AI magic doesn’t actually solve anything. The security engineer gets a list of alerts in their inbox, and it is now their job to either identify and fix the underlying cause or convince another team to do so. The vendors know their alerts can’t be relied upon, so they stop there and leave everything else up to you. This creates loads of extra work and stress for the security team.

What that means on the security side is that you spend a lot of time testing and assessing which solutions you can trust and which will actually reduce your workload. For many years I searched for a tool that would openly and transparently tell me how it works and how it’s going to optimize my systems, my controls, and my time.

I have worked with multiple solutions over the years that, in theory, attempt to do just that: optimize systems, controls, and processes. In practice, these solutions try so hard to ensure that no security event is ever missed that they create alerts for everything, whether or not it is relevant to the environment they are protecting. For example, one network intrusion detection solution I worked with, in an effort to never miss an event, had rules as simple as a TCP connection to a specific port. Why? Because a remote access tool was known to run on that port, and the vendor believed it could not risk failing to alert on a connection to a remote access tool.

The quality of the rule, and hence of the alert, was so low that any connection to that port could trigger the alert, and did, regularly. The result is not some kind of utopian security “full spectrum visibility”, but rather a deluge of alerts that frustrates the security operations team, uses up valuable resources, and rapidly earns the platform a reputation for generating false positives. Alert fatigue leads teams to ignore, or at least deprioritize, alerts from that system. This famously and publicly happened to Target during its 2013 breach: as reported in 2014, its vendor’s tool raised a valid alert on the activity that led to the breach, but the team determined that it “did not warrant immediate follow-up.” This is not to say that a vendor has to be infallible; however, the quality and fidelity of its alerts should be a net benefit to the team, not a source of additional work.
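To make the fidelity problem concrete, here is a minimal Python sketch contrasting the kind of port-only rule described above with a rule that requires more context before it fires. Everything in it is hypothetical: the port number, the handshake signature, the sanctioned-host list, and the sample connections are made up for illustration, and a real intrusion detection rule language would express this differently.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; not taken from any real product or tool.
REMOTE_ACCESS_PORT = 4444
REMOTE_ACCESS_SIGNATURE = b"RAT1"   # assumed handshake prefix of the remote access tool
SANCTIONED_HOSTS = {"10.0.0.5"}     # assumed hosts where remote access is approved


@dataclass(frozen=True)
class Connection:
    src_ip: str
    dst_ip: str
    dst_port: int
    payload_prefix: bytes


def port_only_rule(conn: Connection) -> bool:
    """Low fidelity: fires on ANY connection to the port, whatever is talking over it."""
    return conn.dst_port == REMOTE_ACCESS_PORT


def contextual_rule(conn: Connection) -> bool:
    """Higher fidelity: also requires the tool's handshake and an unapproved destination."""
    return (
        conn.dst_port == REMOTE_ACCESS_PORT
        and conn.payload_prefix.startswith(REMOTE_ACCESS_SIGNATURE)
        and conn.dst_ip not in SANCTIONED_HOSTS
    )


# Made-up sample traffic: an unrelated service that happens to use the port,
# an approved remote access session, and one unapproved session.
traffic = [
    Connection("10.0.1.20", "10.0.2.30", 4444, b"GET / HTTP/1.1"),
    Connection("10.0.1.21", "10.0.0.5", 4444, b"RAT1\x00hello"),
    Connection("10.0.1.22", "203.0.113.9", 4444, b"RAT1\x00hello"),
]

port_only_alerts = [c for c in traffic if port_only_rule(c)]
contextual_alerts = [c for c in traffic if contextual_rule(c)]

if __name__ == "__main__":
    print(f"port-only rule alerts: {len(port_only_alerts)}")    # 3
    print(f"contextual rule alerts: {len(contextual_alerts)}")  # 1
```

The contextual rule trades some recall for precision (it would miss a session that used a different handshake), but every alert it does raise is one worth spending a colleague’s time on.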

And so we built one: a security platform that finds, analyzes, and fixes exposed misconfigurations in your system without asking anything of the security engineer. Sure, engineers can go back, look at what’s been done, and even undo it if they find it necessary. But the work is done for them, and there are no secrets about how it works.

What OpsHelm is and how it works

OpsHelm is an automation platform that applies guardrails to cloud environments in order to make certain classes of security misconfiguration impossible. This eliminates a number of repeatable tasks that suck time out of the security team’s week and protects operations engineers in real time so they can work faster without worrying about creating new exposures.

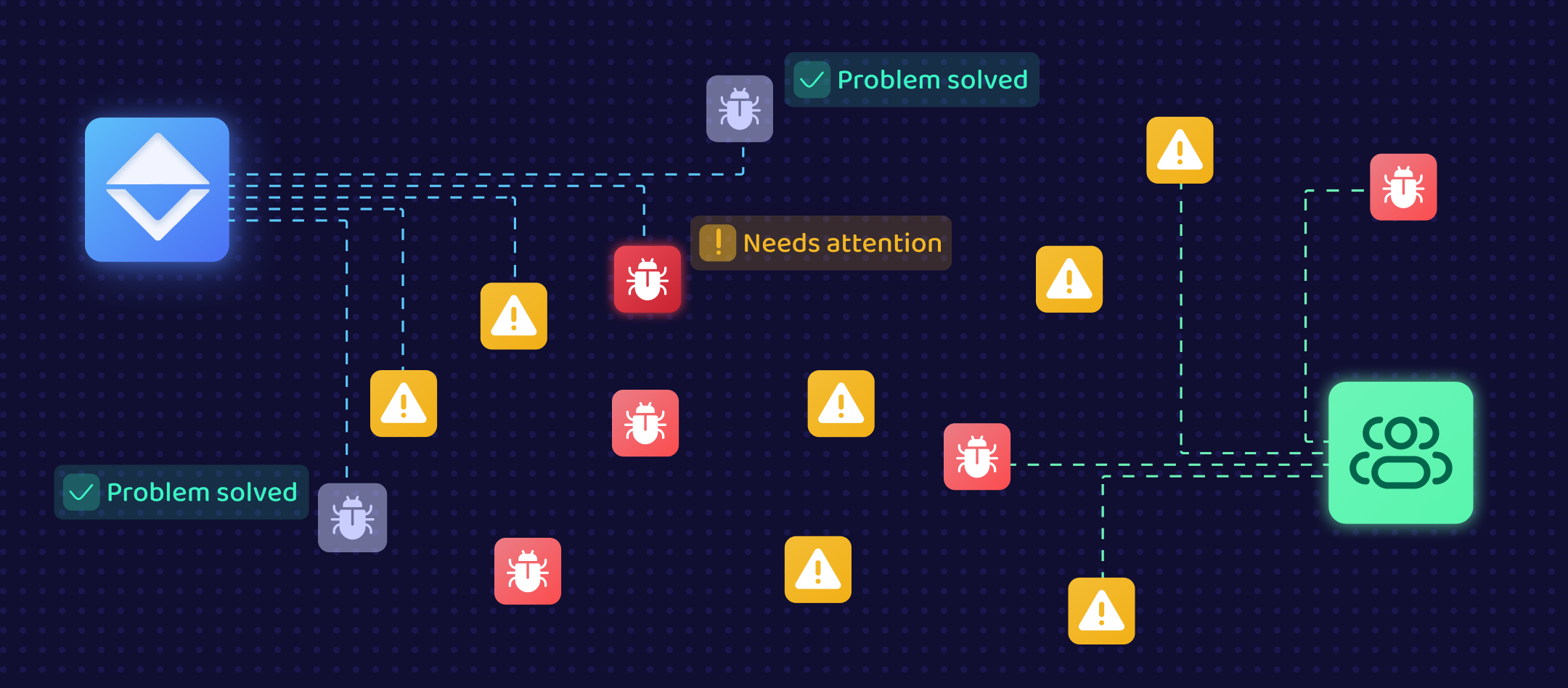

Here’s how it works: we continuously ingest cloud log events and periodically scan your environment to map and understand its current configuration. As changes come in, we evaluate them against predefined rule sets as well as any custom rules. If OpsHelm detects a pattern we know to be bad, we have the option of automatically remediating it by applying a corrected configuration.
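The flow described above (ingest a change event, evaluate it against rules, remediate when a known-bad pattern matches) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpsHelm’s implementation: the event shape, the `Rule` type, the public-bucket rule, and the `handle_event` function are all hypothetical, and a real system would call the cloud provider’s APIs to apply the fix rather than just computing the new configuration. Each match produces a record of the before and after state, which is what lets an engineer review or undo a remediation later.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical, simplified change event; real cloud audit-log records carry far more fields.
Event = dict[str, Any]


@dataclass
class Rule:
    name: str
    matches: Callable[[Event], bool]  # True when the change is a known-bad pattern
    fix: Callable[[Event], dict]      # returns the corrected configuration
    auto_remediate: bool = True       # if False, the finding is only reported


def is_public_bucket(event: Event) -> bool:
    """Hypothetical rule: a storage bucket was configured to allow public reads."""
    return (
        event.get("resource_type") == "bucket"
        and event.get("config", {}).get("public_read") is True
    )


def make_private(event: Event) -> dict:
    """Return a copy of the configuration with public reads turned off."""
    fixed = dict(event["config"])
    fixed["public_read"] = False
    return fixed


RULES = [Rule("bucket-public-read", is_public_bucket, make_private)]


def handle_event(event: Event, rules: list[Rule]) -> list[dict]:
    """Evaluate one change event against every rule; return an audit record per match."""
    actions = []
    for rule in rules:
        if not rule.matches(event):
            continue
        record = {"rule": rule.name, "resource": event["resource_id"], "before": event["config"]}
        if rule.auto_remediate:
            # A real system would apply the corrected configuration via the provider's API here.
            record.update(action="remediated", after=rule.fix(event))
        else:
            record.update(action="reported")
        actions.append(record)
    return actions


if __name__ == "__main__":
    change = {
        "resource_type": "bucket",
        "resource_id": "example-bucket",
        "config": {"public_read": True, "versioning": True},
    }
    for action in handle_event(change, RULES):
        print(action["rule"], action["action"], action["after"])
```

Keeping detection (`matches`) separate from the fix (`fix`), with a per-rule `auto_remediate` switch, reflects the text’s framing that automatic remediation is an option applied to known-bad patterns rather than something forced on every finding.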

A traditional tool does much of the same scanning and mapping, but it mostly just returns a long list of things to fix, and it won’t catch new problems until its next scan. Some tools are better at prioritizing than others, so at least you know the riskiest items are at the top of the list, and each gives varying levels of instruction on how to fix them. But none of them fix anything for you.

This is what OpsHelm can do that the other products cannot, and it’s why I am excited about what our platform offers security engineers. So, yes, we find and prioritize risks just like the others, but the real differentiation of our product lies in automated remediation. We fix the misconfigurations for you, so you can operate confidently without ever needing to know they existed in the first place.